Fake news and disinformation in the digital sphere have been trending topics for years, but what do we really know about the public’s relationship to these issues? In partnership with the French organization Missions Publiques, the Consortium for Science, Policy & Outcomes (CSPO) recently hosted virtual public deliberations on the future of the internet, with participants drawn from across the United States. The results from the forum offer some insight into public opinion on this issue. The public has spoken and the results are in, but what do they mean?

When it comes to fake news, Americans are playing a game of whodunit. We seem to think of disinformation as a problem for others rather than for ourselves. The public agrees that exposure to disinformation and its spread are a problem, but does not agree on exactly whose problem it is.

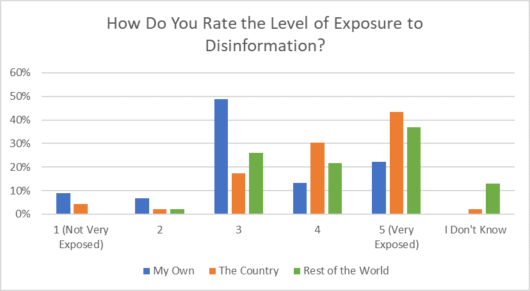

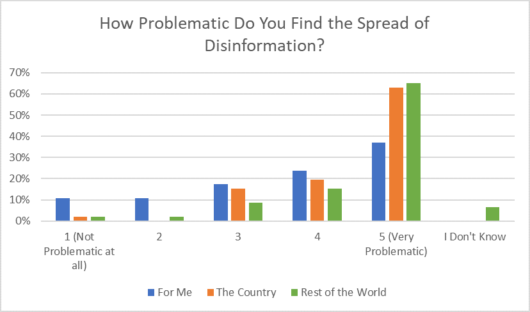

Participants were asked to rate exposure to disinformation on a scale from 1 (lowest) to 5 (highest) for themselves, the country, and the world. While 49% rated their personal exposure a 3, 73% rated the country’s exposure a 4 or 5. On the same scale, they were also asked to rate how problematic the spread of disinformation is for themselves, the country, and the world. Here too, participants rated disinformation as a bigger problem for the country than for themselves: 61% rated it a 4 or 5 for themselves, compared with 83% for the country. If most people see the issue as someone else’s problem, who can be held accountable for it?

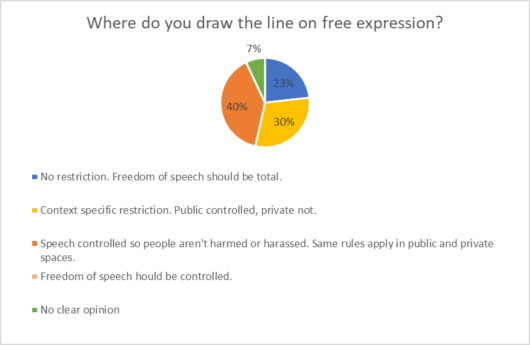

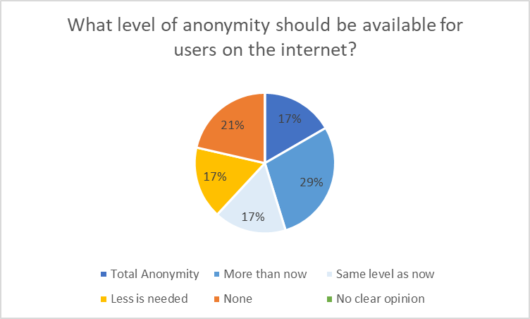

The same sentiment extended to questions of freedom of expression and anonymity in the digital public sphere. A majority of participants (70%) believe that speech should be controlled, yet a majority (63%) also believe that anonymity should be maintained or increased. This is problematic because the two positions contradict each other. If one believes that speech should be controlled to prevent harassment and disinformation, then there needs to be a system in place to hold accountable the individuals who do not follow those guidelines. Accountability and anonymity are not compatible: you cannot hold accountable someone you cannot identify.

It’s almost as if Americans are experiencing the bystander effect on a societal scale. We see the spread of fake news and its damaging effects, but, as with bystanders, our sense of personal responsibility is reduced because we know others are also watching and participating. The anonymity of digital spaces weakens the sense of responsibility that comes from knowing your words can be traced back to you.

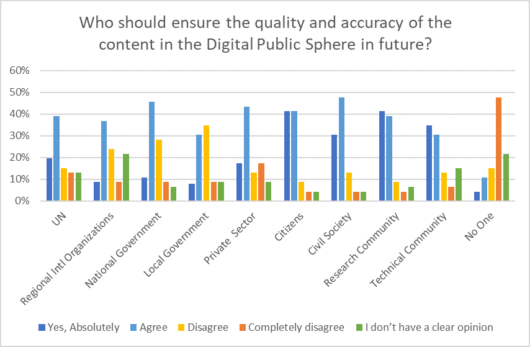

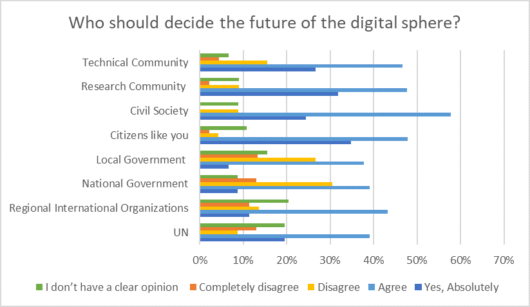

So how do we determine the future of the digital public sphere or, in other words, the internet? Who should decide, and who should ensure the quality and accuracy of the information published on these platforms? The public deliberated both questions. Participants were presented with 10 different models and asked to discuss and rate each one on a scale from 1 (yes, absolutely) to 4 (completely disagree). The results can be seen in the charts above.

One critique of these questions is that they essentially ask for a “yes” or “no” answer. Participants gave majority “yes” votes to most of the models, which makes it difficult to draw conclusions about their preferences. In future forums, it would be helpful to redesign the question as a ranking exercise, which would reveal how participants prioritize the models relative to one another.

Some social media companies are developing ways to address the spread of inaccurate or offensive content. Facebook has created an oversight board of 20 members from around the world to oversee its content moderation and speech policies, a body often described as Facebook’s “Supreme Court.” Its job is to make the tough calls, like whether that ‘cat meme’ you saw was just funny or could be considered hate speech. Twitter has introduced new labels and warnings to flag misinformation, but only for disputed or misleading information related to COVID-19. These efforts are a start, but they are not enough.

What does this mean for future deliberations on the digital public sphere and the spread of disinformation? This issue merits revisiting. The final question in the deliberation session asked participants whether they would change how they get and publish information on the internet in the future. While the majority said yes, they offered no specific comments on how they would change their behavior. A follow-up deliberation with the same group could give us more insight into how their behavior and opinions have changed since the discussion. Failing that, it would still be useful to return to this discussion with a new group and delve further into ways to improve the management of content and interactions in the digital public sphere.

Ekedi Fausther-Keeys is an intern at the Consortium for Science, Policy & Outcomes.